UNIT 2 PEOPLE AND TECHNOLOGY

LEARNING AREA: ORAL AND WRITTEN COMMUNICATION

Key Unit Competence: To use language learnt in the context of people and technology.

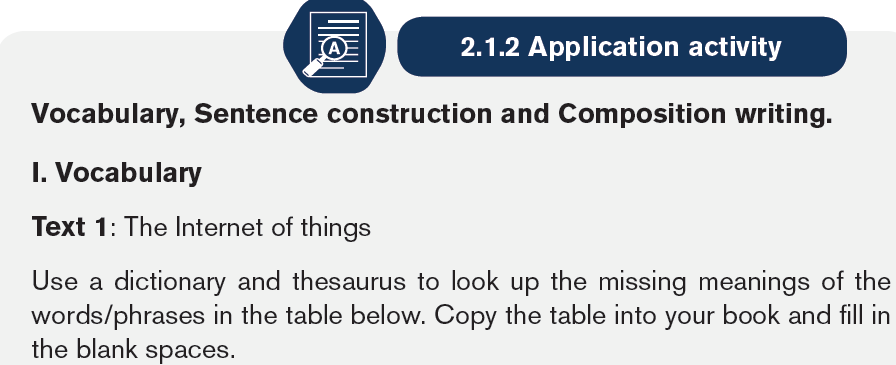

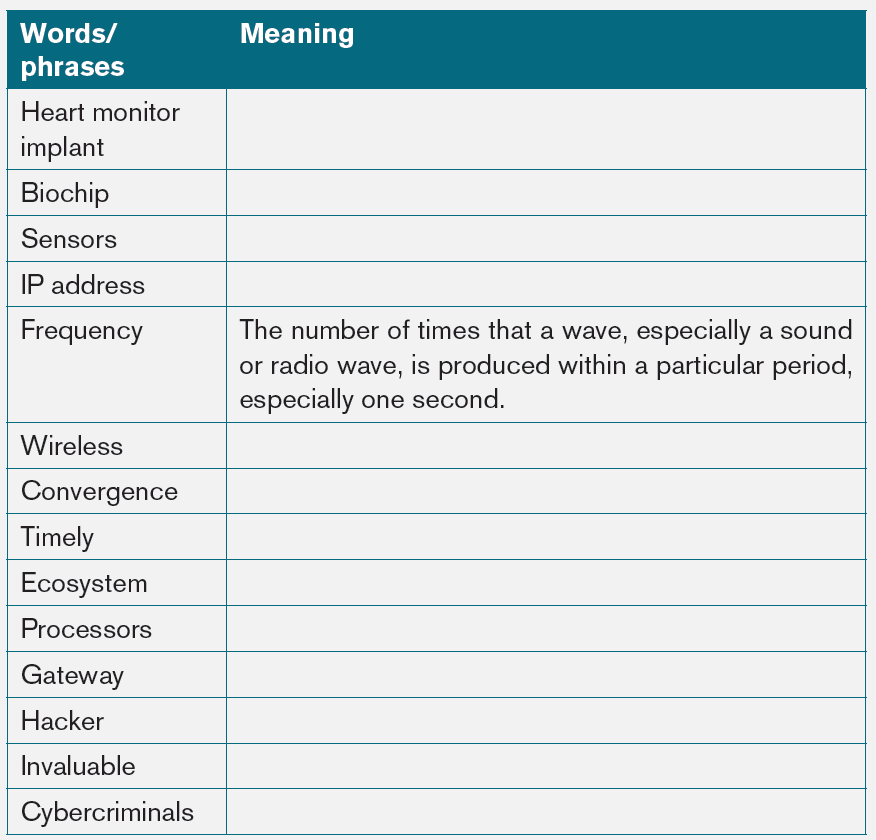

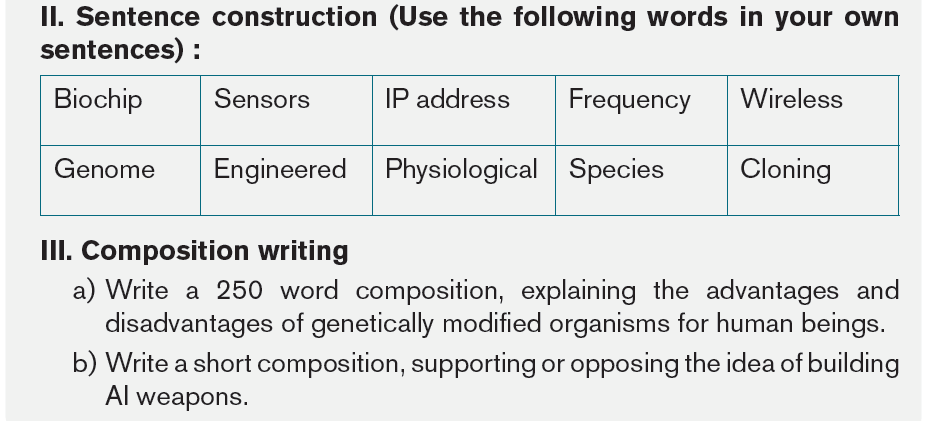

The Internet of things, or IoT, is a system of interrelated computing devices,

mechanical and digital machines, objects, animals or people that are provided

with unique identifiers (UIDs) and the ability to transfer data over a network

without requiring human-to-human or human-to-computer interaction. A thing in

the internet of things can be a person with a heart monitor implant, a farm animal

with a biochip transponder, an automobile that has built-in sensors to alert the

driver when tire pressure is low or any other natural or man-made object that

can be assigned an IP address and is able to transfer data over a network.

The term “the internet of things” was first mentioned in 1999 by Kevin Ashton,

co-founder of the Auto-ID Center at Massachusetts Institute of Technology

(MIT) in a presentation he made to Procter & Gamble (P&G). Wanting to bring

radio frequency ID (RFID) to the attention of P&G’s senior management, Ashton

called his presentation “Internet of Things” to incorporate the cool new trend of

1999: the Internet. MIT professor Neil Gershenfeld’s book, When Things Start

to Think, also appearing in 1999, didn’t use the exact term but provided a clear

vision of where IoT was headed.

IoT has evolved from the convergence of wireless technologies, micro-electromechanical

systems (MEMS), microservices and the internet. The convergence

has helped tear down the silos between operational technology (OT) and

information technology (IT), enabling unstructured machine-generated data to

be analysed for insights to drive improvements.

The internet of things is also a natural extension of SCADA (supervisory control

and data acquisition), a category of software application program for process

control and gathering of data in real time from remote locations to control

equipment and conditions. SCADA systems include hardware and software

components. The hardware gathers and feeds data into a computer that has

SCADA software installed, where it is then processed and presented in a timely

manner. The evolution of SCADA is such that late-generation SCADA systems

developed into first-generation IoT systems.

The concept of the IoT ecosystem, however, didn’t really come into its own

until the middle of 2010 when, in part, the government of China said it would

make IoT a strategic priority in its five-year plan. An IoT ecosystem consists

of web-enabled smart devices that use embedded processors, sensors and

communication hardware to collect, send and act on data they acquire from their

environments. IoT devices share the sensor data they collect by connecting to

an IoT gateway or other edge device where data is either sent to the cloud to

be analysed or analysed locally.

Sometimes, these devices communicate with other related devices and act on

the information they get from one another. The devices do most of the work

without human intervention, although people can interact with the devices -- for

instance, to set them up, give them instructions or access the data.

The internet of things connects billions of devices to the internet and involves

the use of billions of data points, all of which need to be secured. Due to its

expanded attack surface, IoT security and privacy are cited as major concerns.

Because IoT devices are closely connected, all a hacker has to do is to exploit

one vulnerability to manipulate all the data, rendering it unusable. Additionally,

connected devices often ask users to input their personal information, including

names, ages, addresses, phone numbers and even social media accounts --

information that’s invaluable to hackers. Manufacturers that don’t update their

devices regularly -- or at all -- leave them vulnerable to cybercriminals.

However, hackers aren’t the only threat to the internet of things; privacy is

another major concern for IoT users. For instance, companies that make and

distribute IoT devices could use those devices to obtain and sell users’ personal

data. Beyond leaking personal data, IoT poses a risk to critical infrastructure,

including electricity, transportation and financial services.

Adapted from Internet of things (IoT) by Margaret Rouse.

•• Comprehension questions

1. What do you understand by “the Internet of things”?

2. In the phrase “the Internet of things”, what can a “thing” refer to?

3. Appreciate the contribution of Neil Gershenfeld’s book to the creation of

the term “the Internet of things”.

4. Evaluate the relationship between SCADA and Internet of things.

5. What do you understand by “IoT ecosystem”?

6. Using an example, explain when people can interact with the IoT devices.

7. Account for the need to secure Internet of things devices.

Text 2: Genetically modified organism (GMO)

A genetically modified organism (GMO) is an organism whose genome has

been engineered in the laboratory in order to favour the expression of desired

physiological traits or the generation of desired biological products. In

conventional livestock production, crop farming, and even pet breeding, it has

long been the practice to breed selected individuals of a species in order to

produce offspring that have desirable traits. In genetic modification, however,

recombinant genetic technologies are employed to produce organisms whose

genomes have been precisely altered at the molecular level, usually by the

inclusion of genes from unrelated species of organisms that code for traits that

would not be obtained easily through conventional selective breeding.

Genetically modified organisms (GMOs) are produced using scientific

methods that include recombinant DNA technology and reproductive cloning.

In reproductive cloning, a nucleus is extracted from a cell of the individual to

be cloned and is inserted into the enucleated cytoplasm of a host egg (an

enucleated egg is an egg cell that has had its own nucleus removed). The

process results in the generation of an offspring that is genetically identical

to the donor individual. The first animal produced by means of this cloning

technique with a nucleus from an adult donor cell (as opposed to a donor

embryo) was a sheep named Dolly, born in 1996. Since then a number of

other animals, including pigs, horses, and dogs, have been generated by

reproductive cloning technology. Recombinant DNA technology, on the other

hand, involves the insertion of one or more individual genes from an organism of

one species into the DNA (deoxyribonucleic acid) of another. Whole-genome

replacement, involving the transplantation of one bacterial genome into the cell

body, or cytoplasm, of another microorganism, has been reported, although this

technology is still limited to basic scientific applications.

Genetically modified (GM) foods were first approved for human consumption in

the United States in 1994, and by 2014–15 about 90 percent of the corn, cotton,

and soybeans planted in the United States were genetically modified. By the

end of 2014, GM crops covered nearly 1.8 million square kilometres (695,000

square miles) of land in more than two dozen countries worldwide. The majority

of GM crops were grown in the Americas.

In agriculture, plants are genetically modified for a number of reasons. One of

the reasons is to reduce the use of chemical insecticides. For example, the

application of wide-spectrum insecticides declined in many areas growing

plants, such as potatoes, cotton, and corn, that were endowed with a gene from

the bacterium Bacillus thuringiensis, which produces a natural insecticide

called Bt toxin. Farmers who had planted Bt cotton reduced their pesticide use

by 50–80 percent and increased their earnings by as much as 36 percent.

Other GM plants were engineered for a different reason: resistance to a specific

chemical herbicide, rather than resistance to a natural predator or pest. Herbicide

Resistant Crops (HRC) enable effective chemical control of weeds, since only

the HRC plants can survive in fields treated with the corresponding herbicide.

Many HRCs are resistant to glyphosate (Roundup), enabling liberal application

of the chemical, which is highly effective against weeds. Such crops have been

especially valuable for no-till farming, which helps prevent soil erosion. However,

because HRCs encourage increased application of chemicals to the soil, they

remain controversial with regard to their environmental impact.

Some other plants can be genetically modified to increase their nutrients. An

example of a crop that is genetically modified for that reason is “golden rice”.

Golden rice was genetically modified to produce almost 20 times the beta-carotene

of previous varieties. A variety of other crops, modified to endure the

weather extremes common to other parts of the globe, are also in production.

To sum up, GMOs produced through genetic technologies have become a part

of everyday life, entering into society through agriculture, medicine, research,

and environmental management. However, while GMOs have benefited human

society in many ways, some disadvantages exist; therefore, the production of

GMOs remains a highly controversial topic in many parts of the world.

Adapted from Genetically modified organism (GMO), by Judith L. Fridovich-Keil and Julia M.

Diaz.

•• Comprehension questions :

1. In your own words, define the term “Genetically Modified Organism”.

2. State two of the scientific methods used to produce Genetically Modified

Organisms.

3. Differentiate recombinant DNA technology from reproductive cloning.

4. What do you think caused about 90 percent of the corn, cotton

and soybeans planted in the United States to be genetically modified

only about 20 years after the approval of GM food?

5. Give three reasons why plants are genetically modified.

6. Explain the disadvantage of genetically modifying plants in favour of

resistance to a specific chemical herbicide.

Text 3: AI (Artificial Intelligence)

Artificial intelligence (AI) is the simulation of human intelligence processes by

machines, especially computer systems. These processes include learning

(the acquisition of information and rules for using the information), reasoning

(using rules to reach approximate or definite conclusions) and self-correction.

Cambridge Advanced Learner’s Dictionary defines AI as the study of how to

produce machines that have some of the qualities that the human mind has,

such as the ability to understand language, recognize pictures, solve problems

and learn. Particular applications of AI include expert systems, speech

recognition and machine vision.

AI can be categorized as either weak or strong. Weak AI, also known as narrow

AI, is an AI system that is designed and trained for a particular task. Virtual

personal assistants, such as Apple’s Siri, are a form of weak AI. Strong AI, also

known as artificial general intelligence, is an AI system with generalized human

cognitive abilities. When presented with an unfamiliar task, a strong AI system

is able to find a solution without human intervention.

Arend Hintze, an assistant professor of integrative biology, computer science

and engineering at Michigan State University, categorizes AI into four types,

from the kind of AI systems that exist today to sentient systems, which do not

yet exist. His categories are: reactive machines, limited memory, theory of mind

and self-awareness.

Reactive machines are the most basic type: they are unable to form memories

or use past experiences to inform decisions. They can’t function outside the

specific tasks that they were designed for. They simply perceive the world and

react to it. An example is Deep Blue, the IBM chess program that beat Garry

Kasparov in the 1990s. Deep Blue can identify pieces on the chess board and

make predictions, but it has no memory and cannot use past experiences to

inform future ones. It analyses possible moves -- its own and its opponent’s --

and chooses the most strategic move.

Limited memory refers to AI systems that can use past experiences to inform

future decisions. Some of the decision-making functions in self-driving cars

are designed this way. Observations inform actions happening in the not-so-distant

future, such as a car changing lanes. These observations are not stored permanently.

The third type of artificial intelligence is theory of mind. This psychology

term refers to the understanding that others have their own beliefs, desires

and intentions that impact the decisions they make. The AI of this type should

be able to interact socially with human beings. Even though there are a lot of improvements in this field, this kind of AI does not yet exist.

Self-aware AI is AI that has its own consciousness and is super-intelligent, self-aware

and sentient. In brief, it resembles a complete human being. Machines with

self-awareness understand their current state and can use the information to

infer what others are feeling. This type of AI does not yet exist and, if achieved, it will be a milestone in the field of AI.

Some people fear that machines may turn evil and destroy human beings if they

are equipped with feelings and emotions. The real worry isn’t malevolence, but

competence. A super intelligent AI is by definition very good at attaining its

goals, whatever they may be, so we need to ensure that its goals are aligned with

ours. The answer to the question of whether AI can be dangerous to mankind

is that there’s reason to be cautious, but that the good can outweigh the bad if managed properly, as Bill Gates, the co-founder of Microsoft, believes.

Adapted from AI in IT tools promises better, faster, stronger op, by Margaret Rouse.

•• Comprehension questions :

1. In your own words, define the term “Artificial Intelligence”.

2. Differentiate Weak AI from Strong AI.

3. Outline the four types of AI as mentioned in the passage.

4. Compare and contrast limited memory and theory of mind.

5. Should human beings worry about self-aware AI? Explain.

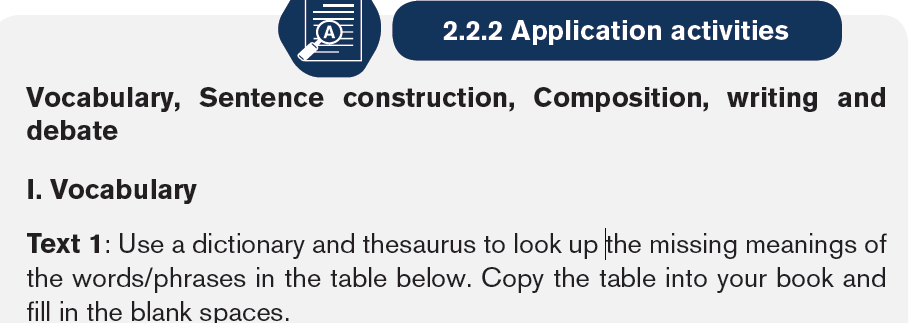

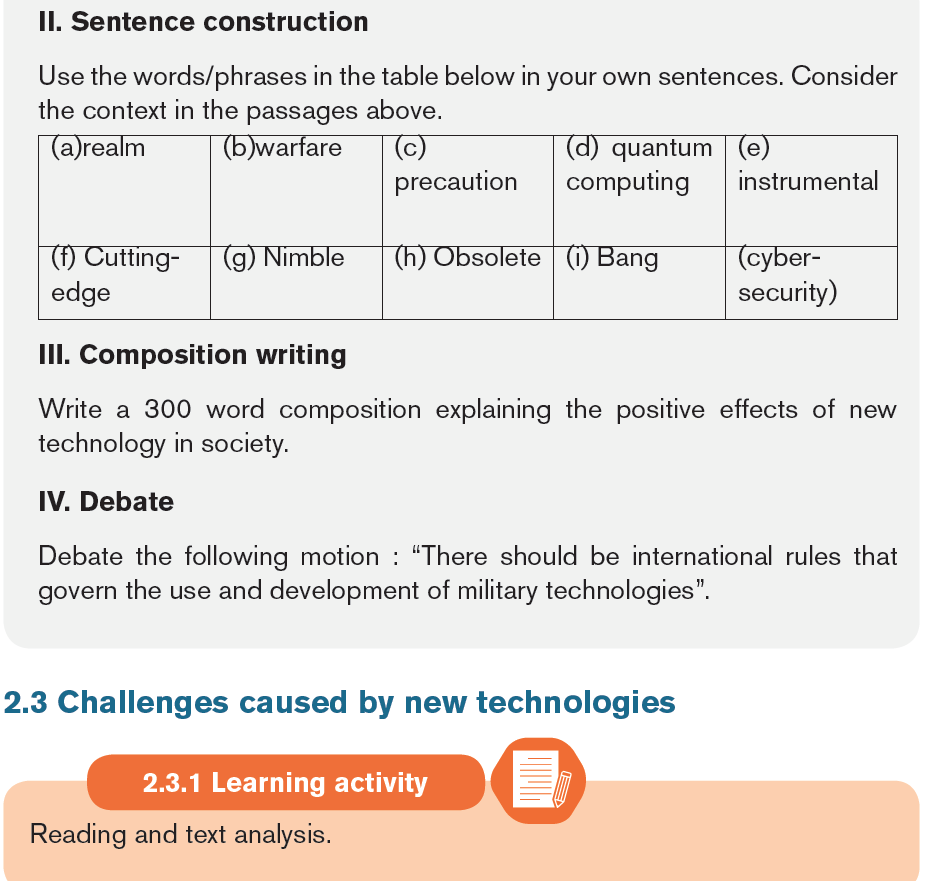

Text 1: The advantages of new technology for businesses

Cutting-edge technology can create high benefits for businesses that are willing

to be early adopters. This strategy, however, requires businesses to abandon

technologies that never fully mature or that are themselves dropped by their

parent companies. A nimble implementation strategy allows entrepreneurs

to realize the benefits of new technologies while avoiding business workflow

issues when a technology cannot survive in the marketplace. The advantages

include: being a key to penetrating a market, revolutionising operations and

reducing costs.

For a small business, a technology should not be evaluated on its own merits

but rather for the ways its implementation will allow your business to accomplish

things that are impossible for your competitors. It does not matter if a technology

speeds up your manufacturing process by 20 percent unless that speed is a key

to penetrating a market that you cannot otherwise reach.

A new technology that is disruptive to the overall marketplace, but that will give

you the first-to-market advantage, is the best new process to consider.

New technology should help us to revolutionise old operations. Most businesses,

like most organizations, tend at first to use new technologies in very similar ways

to the older ones that they replaced. For example, a cell phone is not simply

a wireless landline phone -- it is also a device for rescheduling meetings on

the fly, arranging for impromptu visits, surfing the internet, avoiding congested

traffic, etc. Companies that saw mobile communications for these abilities had

an immediate jump on companies that were still organized around older telephone

paradigms when cell phones gained widespread use. When considering a new

technology, make an explicit list of underlying assumptions in your business

model -- then see if the technology makes any of them obsolete.

Paradoxically, new technologies can be both a major source of expenses for

your business and a method of eradicating your biggest costs. Regular

implementation of technology on the cutting edge means that sometimes you

will need to abandon your investment: if the technology fails to work, if it is

defeated by its competition or if its parent company folds. On the other hand,

some technologies completely change the cost structure for the service they

provide: Skype, for example, provides an inexpensive service that replaces both

international phone calls and videoconferencing, which previously could cost

thousands of dollars annually. Focus on the areas where you will see the biggest

bang for your technology buck if a new technology succeeds -- but be ready to

abandon the cutting edge if it cannot deliver on these promises.

Adapted from The Advantages of New Technology for Businesses, by Ellis Davidson.

•• Comprehension questions :

1. Which businesses are likely to benefit from cutting-edge technology?

2. What do you understand by “technologies that are themselves dropped

by their parent companies”?

3. Outline at least three advantages of using new technologies in business.

4. Explain thoroughly how one should evaluate the success of new

technology in a small business.

5. Using an example, explain how new technology should help us to

revolutionise old operations.

6. Can technology be a major source of expenses for your business?

Explain.

7. Under which circumstances are businessmen advised to abandon cutting-edge technology?

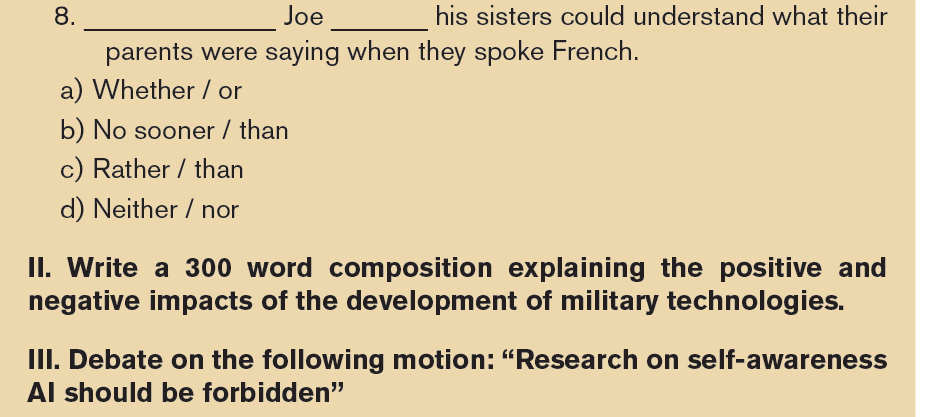

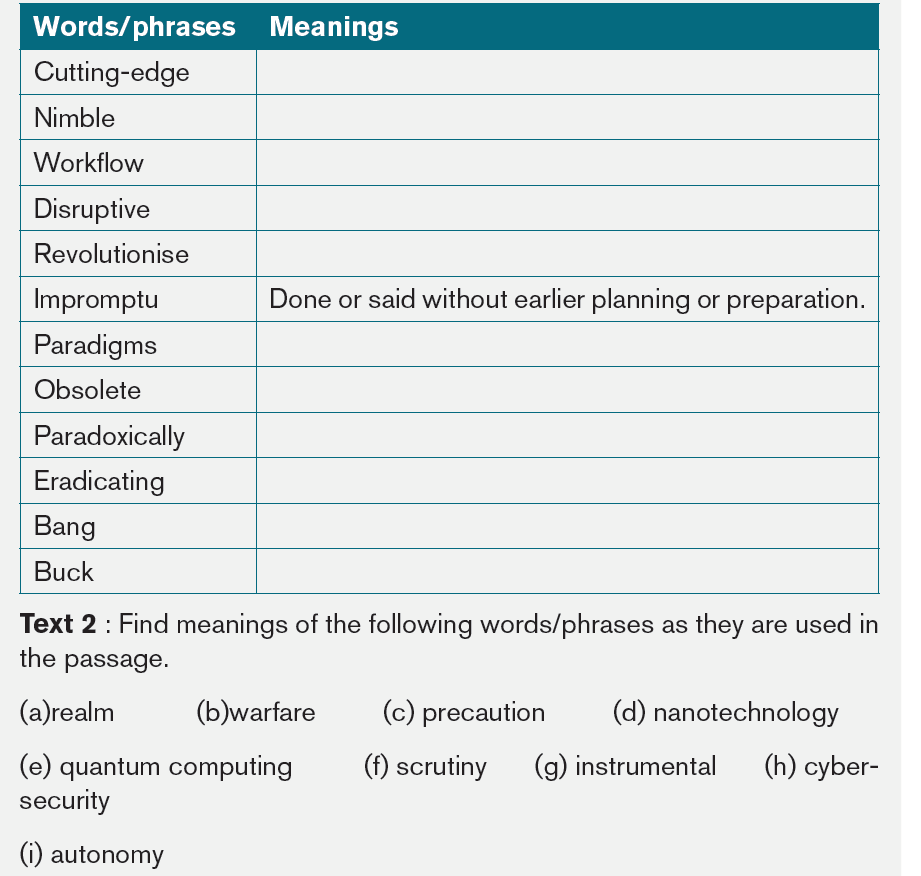

Technology is a fundamental agent of social change, offering new possibilities

to produce, store and spread knowledge. This is particularly clear in the military

realm. Major shifts in military history have often followed ground-breaking

developments in the history of science and technology. If not initially the result

of military research and development, new technologies often find military

applications, which, in some cases, have disruptive effects on the conduct

of warfare. These can be positive and negative effects: progress in military

technology has improved the possibility of precaution in the mobilization and

application of force, but it has also provided more powerful capabilities of harm

and destruction.

Current innovations in artificial intelligence, robotics, autonomous systems,

Internet of things, 3D printing, nanotechnology, biotechnology, material science

and quantum computing are expected to bring social transformations of an

unprecedented scale. For the World Economic Forum, they form no less than

the foundation of a ‘fourth industrial revolution.’ How these technologies may be

used in, and transform, the military and security realms is not yet fully understood

and needs further scrutiny. The capabilities they could provide may directly or

indirectly affect the preconditions for peace, the nature of conflicts and how

insecurity is perceived and managed, by people and states. Monitoring their

development is therefore instrumental to understanding the future of warfare

and global security.

Improving cyber-security and cyber-defence capabilities has recently become

a top priority on the national security agendas of many European states. A wide

range of states are creating dedicated cyber-defence agencies, increasing

cyber-related human and financial resources, and drafting national strategies

that sometimes include developing offensive cyber-capabilities. In this new and

rapidly developing field, the implications of these developments for international

security and disarmament are as yet unclear.

Autonomy in weapon systems was not forgotten in this tech trend. Since 2013,

the governance of Lethal Autonomous Weapon Systems (LAWS) has been

discussed under the framework of the 1980 United Nations Convention on

Certain Conventional Weapons (CCW). However, the discussion remains

at an early stage as most states are still in the process of understanding the

concrete aspects and implications of increasing autonomy in weapon systems.

To support states in their reflection, and more generally to contribute to more

concrete and structured discussions on LAWS at the CCW, SIPRI launched a

research project in February 2016 that looks at the development of autonomy in

military systems in general and in weapon systems in particular.

The project ‘mapping the development of autonomy in weapon systems’ was

designed based on the assumption that efforts to develop concepts and practical

measures for monitoring and controlling LAWS will remain premature without a

better understanding of (1) the technological foundations of autonomy, (2) the

current applications and capabilities of autonomy in existing weapon systems

and (3) the technological, socio-economic, operational and political factors

that are currently enabling or limiting its advances.

Its aim, in that regard, is to provide CCW delegates and the interested public

with a ‘reality check on autonomy’ through a mapping exercise that will answer a series

of fundamental questions, such as: What types of autonomous applications are

found in existing and forthcoming weapon systems? What are the capabilities

of weapons that include some level of autonomy in the target cycle, how are

they used or intended to be used and what are the principles or rules that

govern their use?

Adapted from Emerging military and security technologies, by Dr Vincent Boulanin, Dr Sibylle

Bauer, Noel Kelly and Moa Peldán Carlsson.

•• Comprehension questions :

1. What do you understand by “ground-breaking developments”?

2. Discuss positive and negative effects of military technologies.

3. What does “fourth industrial revolution” refer to according to World

Economic Forum?

4. Why do you think it is instrumental to monitor the development of military

technologies?

5. What do you understand by “offensive cyber-capabilities”?

6. Do you think autonomy in weapon systems should be supported?

7. Describe the aim of the project of mapping the development of autonomy in weapon systems.

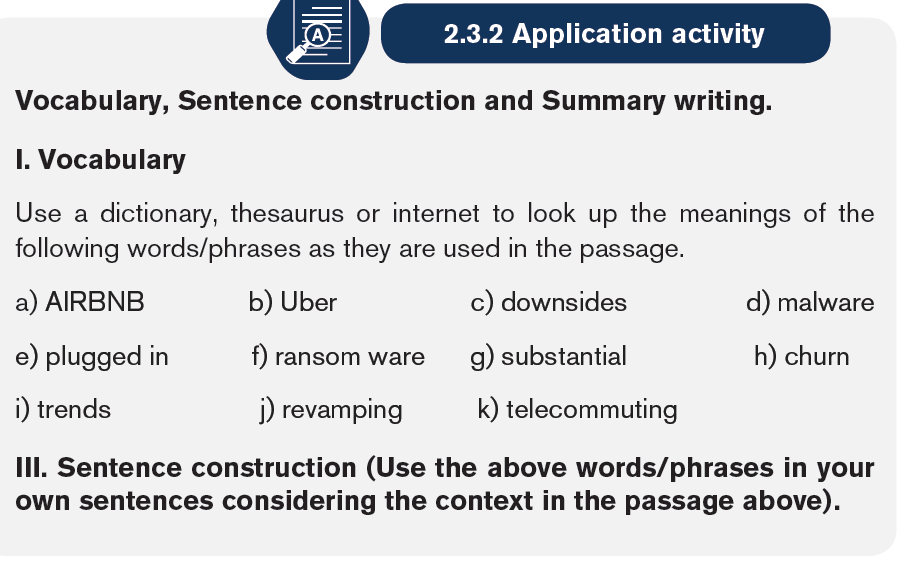

Text 1: The Disadvantages of Using Technology in Business

Modern technology has had an amazing positive impact on business, from

improving productivity, to opening new markets around the world for even the

smallest of businesses, to creating entirely new business models like Airbnb

and Uber. But advanced technology has its negatives as well. While its negative

aspects probably shouldn’t stop you from taking advantage of technology, you

should certainly be aware of the potential downsides so you can take steps

to minimize them. Those negative aspects include: distraction, security risks,

expensive obsolescence and destruction of social boundaries.

Everyone with a smartphone, laptop, tablet or desktop computer has access to

the incredible world of the internet in most workplaces. Hopefully, employers

and employees use this access for research and communication in the service

of their business.

But the internet can be a powerful distraction as well, as employees are faced with

the temptation of checking Facebook, reading the latest tweet storm on Twitter

or watching cute cat videos on YouTube. In some companies, approximately

half of all office employees spend an hour or more per day on non-work-related

internet sites.

The security risks of high technology are also a big concern. Our online devices

are a two-way street, giving employers and their staff access to the outside

world, but also allowing outsiders into their place of business. Emails routinely

contain malware that can infect computer systems. Personal devices such as

USB drives might get infected with a virus at an employee’s home and then

plugged in to an office machine, transferring the virus to the company’s systems.

Important files can be stolen, as happened to Sony when sensitive internal emails

were revealed to the world, or Equifax, which had the private information of

millions of people stolen through electronic snooping. Bad actors can introduce

ransomware that freezes up a system, promising to unlock it only after payment

of a substantial fee. Perhaps the eeriest sorts of intrusions are computer viruses

that take remote control of companies’ operations. Malware has been known to

crash electric utilities, interfere with hospitals and police stations and even take

control of computer-operated, self-driving cars.

Expensive obsolescence is another threat to companies that use new

technologies. Technological change advances very rapidly, which means that

the technology you invest in today may seem to be out-of-date almost the

moment it is installed and up and running. Technological churn – new phones,

new laptops, the latest software – keeps the company current with the latest

trends. But it’s also a sizable outlay of cash, not only for the technology itself,

but also for the revamping of related systems. Employees need to be trained

on new systems, IT staff needs to update its certifications and capabilities, and

security protocols have to be revised as well.

On top of the previously mentioned disadvantages there is destruction of

social boundaries. The ability to communicate instantly with just about anyone,

anywhere can sometimes interfere with the ordinary dynamics of face-to-face

communication. Technology may mean fewer employees show up in person to

meetings. It may also mean fewer people in the employer’s office, as employees

take advantage of telecommuting options. Although these capabilities can

actually improve productivity in some cases, many people find they miss the

more social aspects of a traditional company where staff and clients showed up

in person to do business.

Also on the list of potential negatives is rather the opposite of isolation. The fact

that you and your staff are reachable 24 hours a day, seven days a week, 365

days a year can make for an unpleasant loss of boundaries, as managers and

clients come to expect full service no matter the time of day or time of year.

Adapted from The Security Risks of High Technology by David Sarokin.

•• Comprehension questions

1. Should negative aspects of technology stop us from taking advantage of

technology? Explain.

2. Outline the four negative aspects of technology as mentioned in the

passage.

3. Explain how technology can distract employees.

4. To what extent are our online devices vulnerable?

5. Explain how expensive obsolescence is a threat to companies that use

new technologies.

6. Discuss the negative social impacts of technology in the workplace.

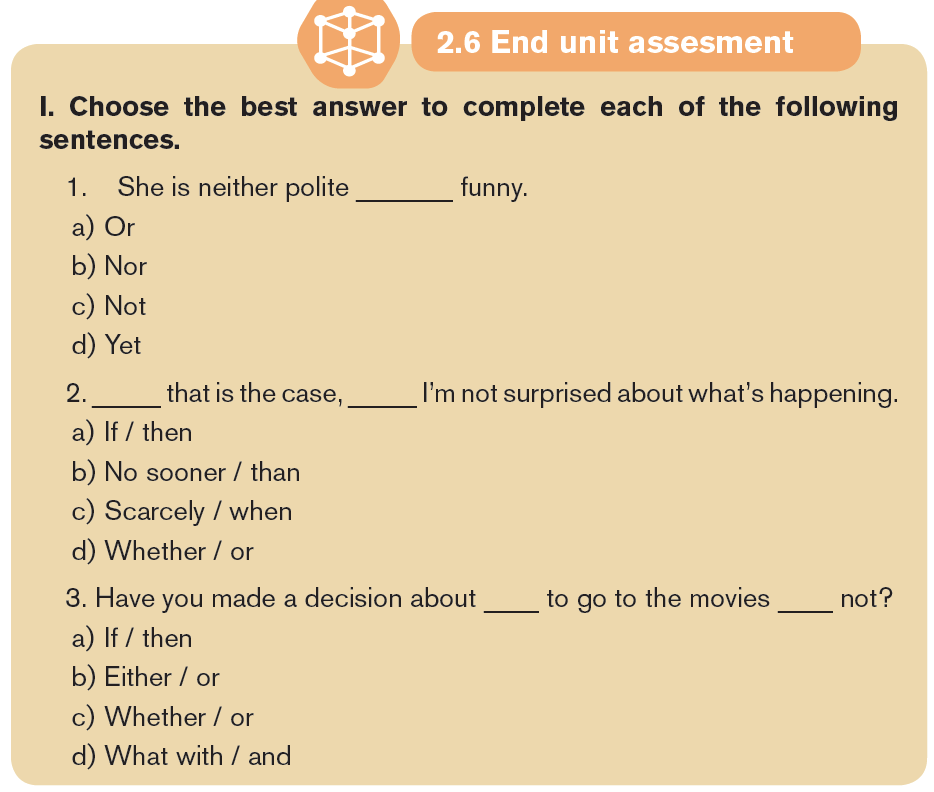

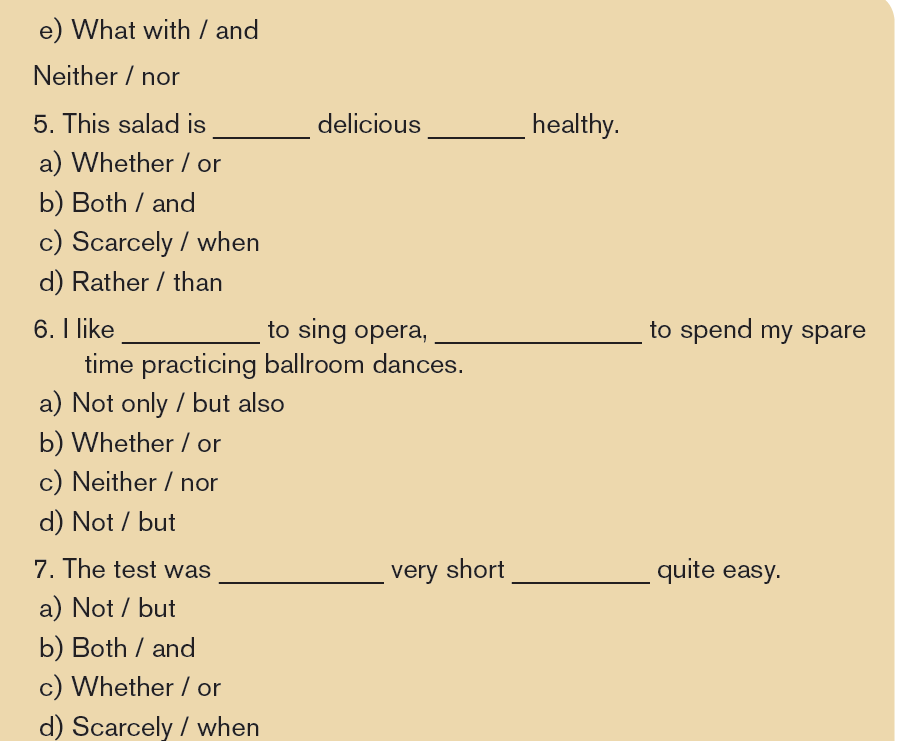

2.4. Language Structure : Correlative connectors

Correlative conjunctions are sort of like tag-team conjunctions. They come in

pairs, and you have to use both of them in different places in a sentence to make

them work. They get their name from the fact that they work together (co-) and

relate one sentence element to another. There are many correlative connectors

but the most common are:

•• Either... or

•• Neither... nor

•• Not only ... but also

•• Both ... and

•• the more ... the less

•• the more ... the more

•• no sooner ... than

•• whether ... or

•• rather ... than

•• as ... as

•• such ... that

•• scarcely ... when

•• as many ... as

Examples

1. AI can be categorized as either weak or strong.

2. Both the planning of technology projects and their use are costly.

3. Computers are not only useful but also stressful.

4. Not only is the management of technological waste expensive, but it is also

harmful to the environment.

5. No sooner did he enter the room than my tablet disappeared.

6. Do you care whether we use a smart camera or a projector in the

conference?

7. The more you think about it, the less likely you are to take action.

8. The more it rains, the more serious the problems become.

9. Wouldn’t you rather take a chance to owe me than be in debt to Michael?

10. Using a computer isn’t as fun as using a tablet.

11. Such was the nature of their volatile relationship that they never would

have made it even if they’d wanted to.

12. I had scarcely walked in the door when I got an urgent call and had to run

right back out again.

13. There are as many self-driving cars in Europe as there are in the USA.

Exercise: Complete each sentence using the correct correlative connector pair from

the parentheses:

1. I plan to take my vacation ………… in June ………… in July. (whether /

or, either / or, as / if)

2. ………… I’m feeling happy ………… sad, I try to keep a positive attitude.

(either / or, whether / or, rather / than)

3. ………… had I taken my shoes off ………… I found out we had to leave

again. (no sooner / than, rather / than, whether / or)

4. ………… only is dark chocolate delicious, ………… it can be healthy.

(whether / or, not / but also, just as / so)

5. I will be your friend ………… you stay here………… move away. (either/

or, whether/or, neither/nor)

6. ………… flowers ………… trees grow during warm weather. (neither /

nor, both / and, not / but also)

7. ………… do we enjoy summer vacation, ………… we ………… enjoy

winter break. (whether / or, not only / but also, either / or)

8. I knew it was going to be a bad day because I ………… overslept

………… missed the bus. (not only/but also, neither/nor, whether/or)

9. It’s ………… going to rain ………… snow tonight. (The more / the less,

either / or, both / and)

10. Savoury flavours are ………… sweet ………… sour. (often / and, neither /

nor, both / and)

2.5 Spelling and pronunciation

A. Spelling

Identify and correct the misspelled words in the following paragraph:

Everyone with a smartiphone, luptop, tablette or desktop computer has access to

the incredible world of the Internet in most of workplaces. Hopefully, employers

and employees use this access for research and comunication in the service

of their business. But the Internet can be a powerful distruction as well, as

employees are faced with the temptetion of checking facebook, reading the

latest tweet storm on Twitter or watching cute cat videos on YouTube. In some

companies, approximately half of all office employees spend an hour or more

per day on non-work-related internet cites.

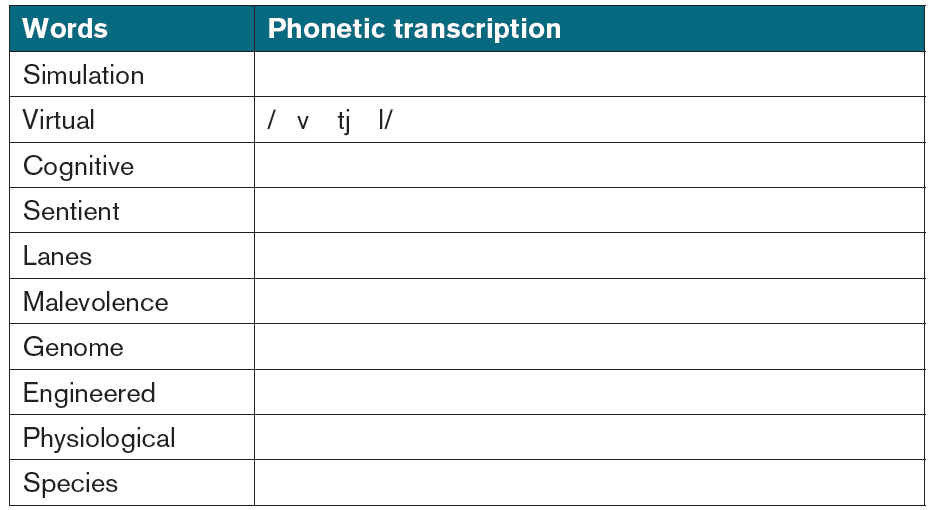

B. Phonetic transcription

Give the missing phonetic transcription of the words in the table below and practise pronouncing them correctly.